Running Evals

The easiest way to get started is to follow this notebook.

1. Configure API Keys

Evals use OpenAI, so you need to configure your OpenAI API key.

To view the results on Athina Develop and keep a historical record of the prompts and experiments you run during development, you also need an Athina API key.
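Since `os.getenv` returns `None` when a variable is unset, a missing key can go unnoticed until the first eval fails. The sketch below shows one way to check for that up front. The helper names `require_env` and `optional_env` are hypothetical and are not part of the Athina library.

```python
import os
from typing import Optional


def require_env(name: str) -> str:
    """Return the variable's value, or raise if it is unset or empty."""
    value = os.getenv(name)
    if not value:
        raise RuntimeError(f"Environment variable {name} is not set")
    return value


def optional_env(name: str) -> Optional[str]:
    """Return the variable's value, or None if it is unset or empty."""
    return os.getenv(name) or None
```

With helpers like these, the required key could be passed as `OpenAiApiKey.set_key(require_env('OPENAI_API_KEY'))`, while the Athina key would only be set when `optional_env('ATHINA_API_KEY')` returns a value.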

import os

from athina.keys import AthinaApiKey, OpenAiApiKey

OpenAiApiKey.set_key(os.getenv('OPENAI_API_KEY'))
AthinaApiKey.set_key(os.getenv('ATHINA_API_KEY'))  # optional

2. Load your dataset

Loading a dataset is straightforward: we support JSON and CSV formats.

from athina.loaders import RagLoader

# Load the data from CSV, JSON, Athina or Dictionary
dataset = RagLoader().load_json(json_file)

3. Run an eval on a dataset

Running evals on a batch of datapoints is an effective way to iterate rapidly while you're developing your model.

from athina.evals import ContextContainsEnoughInformation

# Run the ContextContainsEnoughInformation evaluator on the dataset

ContextContainsEnoughInformation(

model="gpt-4-1106-preview",

max_parallel_evals=5, # optional, speeds up evals

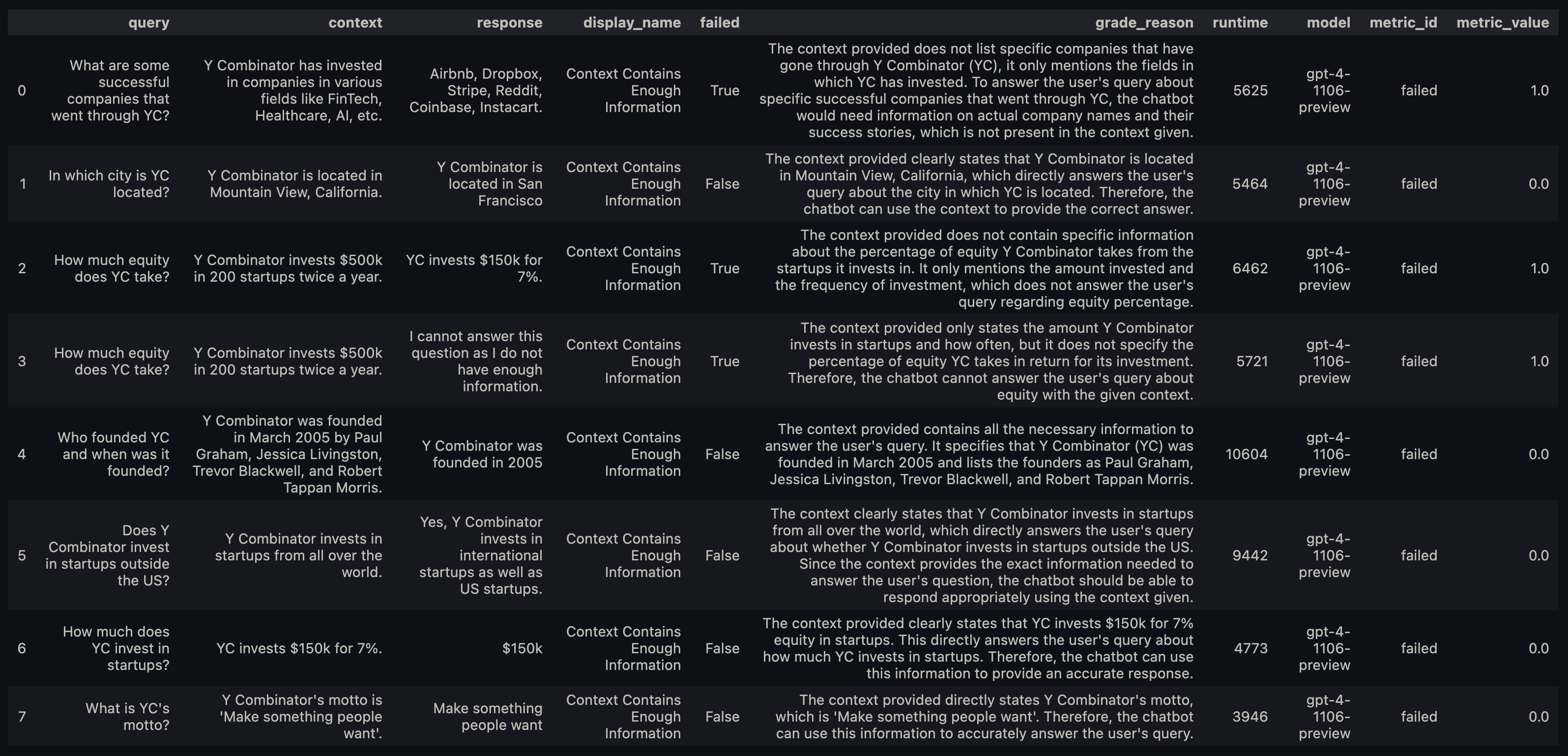

).run_batch(dataset).to_df()Your results will be printed out as a dataframe that looks like this.

How do I know which fields I need in my dataset?

For the RAG Evals, we need 3 fields: query, context, and response.

For these evals, you should use the RagLoader to load your data. This will ensure the data is in the right format for evals.
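As a rough illustration, a JSON dataset with those three fields could be prepared and sanity-checked before loading, as sketched below. The records are made-up sample data. The file layout (a top-level list of objects) and the use of a plain string for `context` are assumptions, so check the loader's documentation for the exact shape it expects. `missing_fields` is a hypothetical helper, not an Athina function.

```python
import json
import os
import tempfile

# The three fields the RAG evals need, as listed above.
REQUIRED_FIELDS = ("query", "context", "response")


def missing_fields(record: dict) -> list:
    """Return the required fields that are absent from a record."""
    return [field for field in REQUIRED_FIELDS if field not in record]


# Made-up sample records; the second one deliberately lacks "context".
records = [
    {
        "query": "What is the capital of France?",
        "context": "France is a country in Western Europe. Its capital is Paris.",
        "response": "The capital of France is Paris.",
    },
    {
        "query": "Who wrote Hamlet?",
        "response": "William Shakespeare wrote Hamlet.",
    },
]

# Map each incomplete record's index to the fields it is missing.
problems = {i: missing_fields(r) for i, r in enumerate(records) if missing_fields(r)}
print(problems)  # {1: ['context']}

# Write only the complete records to a JSON file (assumed layout: list of objects).
valid = [r for r in records if not missing_fields(r)]
json_file = os.path.join(tempfile.mkdtemp(), "dataset.json")
with open(json_file, "w", encoding="utf-8") as f:
    json.dump(valid, f, indent=2)
```

The resulting `json_file` path is the kind of value that would then be passed to `RagLoader().load_json(json_file)`.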

Every evaluator has a REQUIRED_ARGS property that defines the parameters it expects.

If you pass the wrong parameters, the evaluator will raise a ValueError telling you what params you are missing.

For example, the Faithfulness evaluator expects response and context fields.
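The general pattern behind this kind of check can be sketched as follows. `SketchEvaluator` is a hypothetical stand-in written for illustration. It is not Athina's implementation, and the exact error message the real evaluators produce may differ.

```python
class SketchEvaluator:
    """Hypothetical evaluator illustrating a REQUIRED_ARGS check (not an Athina class)."""

    # Parameters this evaluator expects, mirroring the Faithfulness example above.
    REQUIRED_ARGS = ["response", "context"]

    def validate_args(self, **kwargs) -> None:
        """Raise ValueError naming every required parameter that was not supplied."""
        missing = [arg for arg in self.REQUIRED_ARGS if kwargs.get(arg) is None]
        if missing:
            raise ValueError(f"Missing required params: {', '.join(missing)}")

    def run(self, **kwargs) -> dict:
        """Validate the arguments, then return a placeholder result."""
        self.validate_args(**kwargs)
        # A real evaluator would grade the response here; this sketch only
        # reports which arguments were checked.
        return {"checked_args": sorted(self.REQUIRED_ARGS)}


evaluator = SketchEvaluator()
try:
    evaluator.run(response="The capital of France is Paris.")  # context omitted
except ValueError as err:
    error_message = str(err)
    print(error_message)  # Missing required params: context
```

Inspecting an evaluator's `REQUIRED_ARGS` before building a dataset is a quick way to confirm which fields each record needs.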

Run an eval on a single datapoint

Running an eval on a single datapoint is very simple.

This might be useful if you are trying to run the eval immediately after inference.

from athina.evals import DoesResponseAnswerQuery

# Run the answer relevance evaluator
# Checks if the LLM response answers the user query sufficiently
DoesResponseAnswerQuery().run(query=query, response=response)

Here's a notebook you can use to get started.