Athina AI

Monitoring, Analytics & Evaluations for LLM Developers

Athina is an evaluation framework and production monitoring platform for your LLM-powered app.

Athina is designed to enhance the performance and reliability of AI applications through real-time monitoring, granular analytics, and plug-and-play evaluations.

We provide powerful tools (both open-source and closed-source) to assist LLM developers through all stages of the development lifecycle – development, iteration, and production.

In just 10 minutes, you can start monitoring and evaluating with Athina, allowing you to focus on developing your AI with the best tools on the market.

Sign Up For Free →

Monitor

Watch Demo Video →

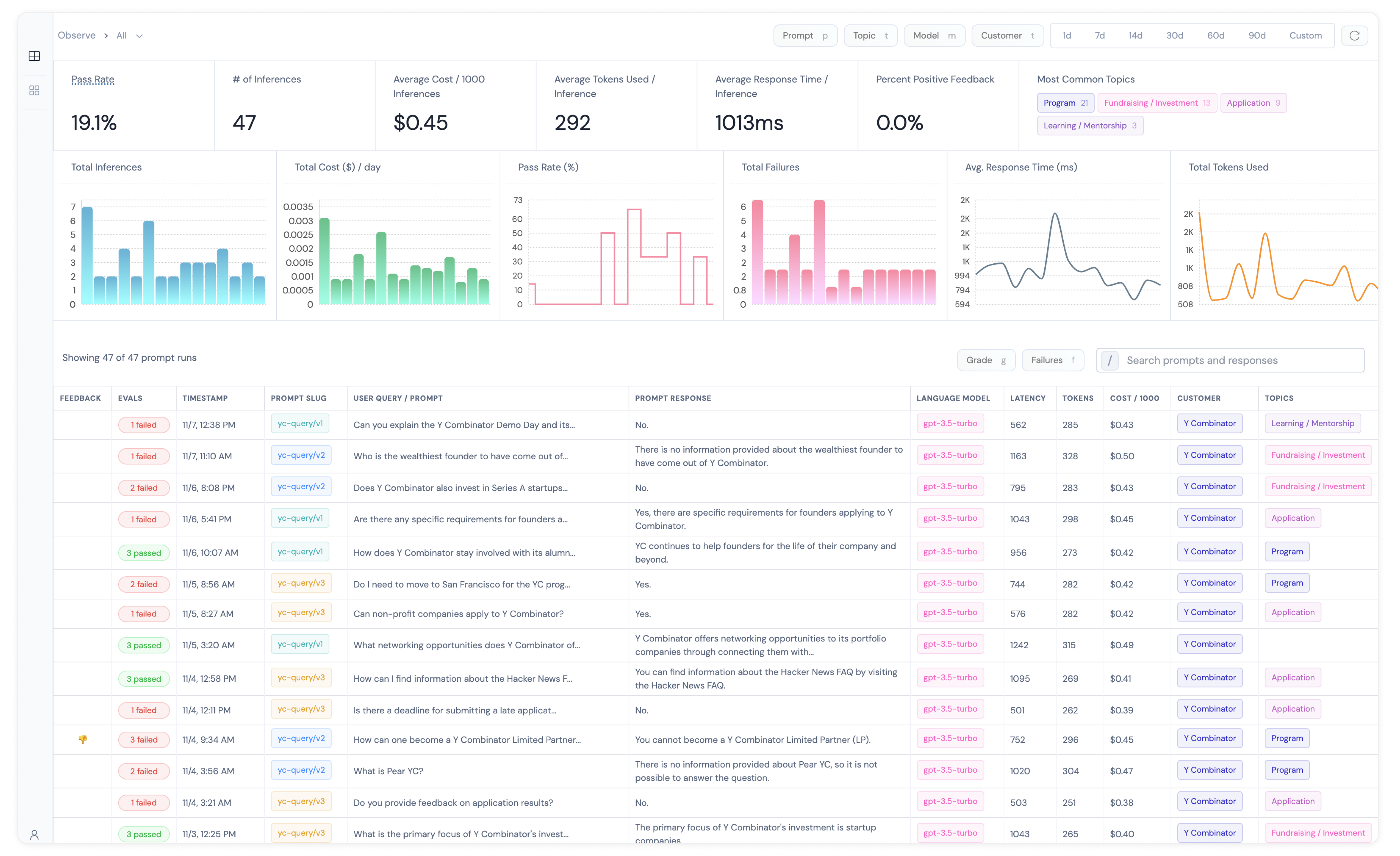

Athina Monitor assists developers in several key areas:

- Visibility: By logging prompt-response pairs using our SDK, you get complete visibility into your LLM touchpoints, allowing you to trace through and debug your retrievals and generations.

- Usage Analytics: Athina keeps track of usage metrics like response time, cost, token usage, feedback, and more, regardless of which LLM you are using.

- Query Topic Classification: Automatically classify user queries into topics to get detailed insights into popular subjects and AI performance per topic.

- Granular Segmentation: You can segment your usage and performance metrics based on different metadata properties such as customer ID, prompt version, language model ID, topic, and more to slice and dice your metrics.

Here are some examples of how Athina can help you understand your LLM behavior:

- For queries related to `Refunds`, retrieval accuracy is only 55%.

- 80% of user feedback is negative for customer ID `nike-california-244`.

- Your model's answer relevance is only 37% for prompt `customer_support/v2.5`.

- Your avg. response time is 6.7s for gpt-4, and 2.1s for gpt-3.5-turbo.

- You are spending an average of $381.44 per day on OpenAI inferences.
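To make the idea of segmentation concrete, here is a minimal sketch of how insights like the ones above could be derived from logged inferences. It does not use the Athina SDK: the `LogRecord` shape, the helper names, and the sample data are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class LogRecord:
    # Hypothetical shape of one logged inference; this is NOT Athina's schema.
    model: str
    customer_id: str
    response_time_s: float
    feedback: int  # +1 for positive, -1 for negative


def segment_by(records, key):
    """Group records by the value of the attribute named `key`."""
    groups = defaultdict(list)
    for record in records:
        groups[getattr(record, key)].append(record)
    return dict(groups)


def avg_response_time(records):
    """Mean response time, in seconds, over a group of records."""
    return mean(r.response_time_s for r in records)


def negative_feedback_share(records):
    """Fraction of records in the group that received negative feedback."""
    return sum(1 for r in records if r.feedback < 0) / len(records)


# Made-up sample data, only to exercise the helpers.
records = [
    LogRecord("gpt-4", "acme", 6.0, 1),
    LogRecord("gpt-4", "acme", 8.0, -1),
    LogRecord("gpt-3.5-turbo", "globex", 2.0, -1),
    LogRecord("gpt-3.5-turbo", "globex", 3.0, -1),
]

# Slice the same records along two different metadata properties.
latency_by_model = {
    model: avg_response_time(group)
    for model, group in segment_by(records, "model").items()
}
negative_by_customer = {
    customer: negative_feedback_share(group)
    for customer, group in segment_by(records, "customer_id").items()
}
```

The same `segment_by` helper works for any metadata property stored on the record, such as prompt version or topic, which is the "slice and dice" pattern described above.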

Evaluate: In Production

- Automatic Evaluations: Utilize prebuilt evaluations to detect hallucinations, bad or inaccurate outputs, and measure model performance quantitatively.

- Real-Time Monitoring: Keep an eye on your production environment and instantly detect when things go wrong.

- Performance Metrics: Evaluate your AI's performance in production through evaluation metrics, combined with user / grader feedback to pinpoint areas where your model might be underperforming.

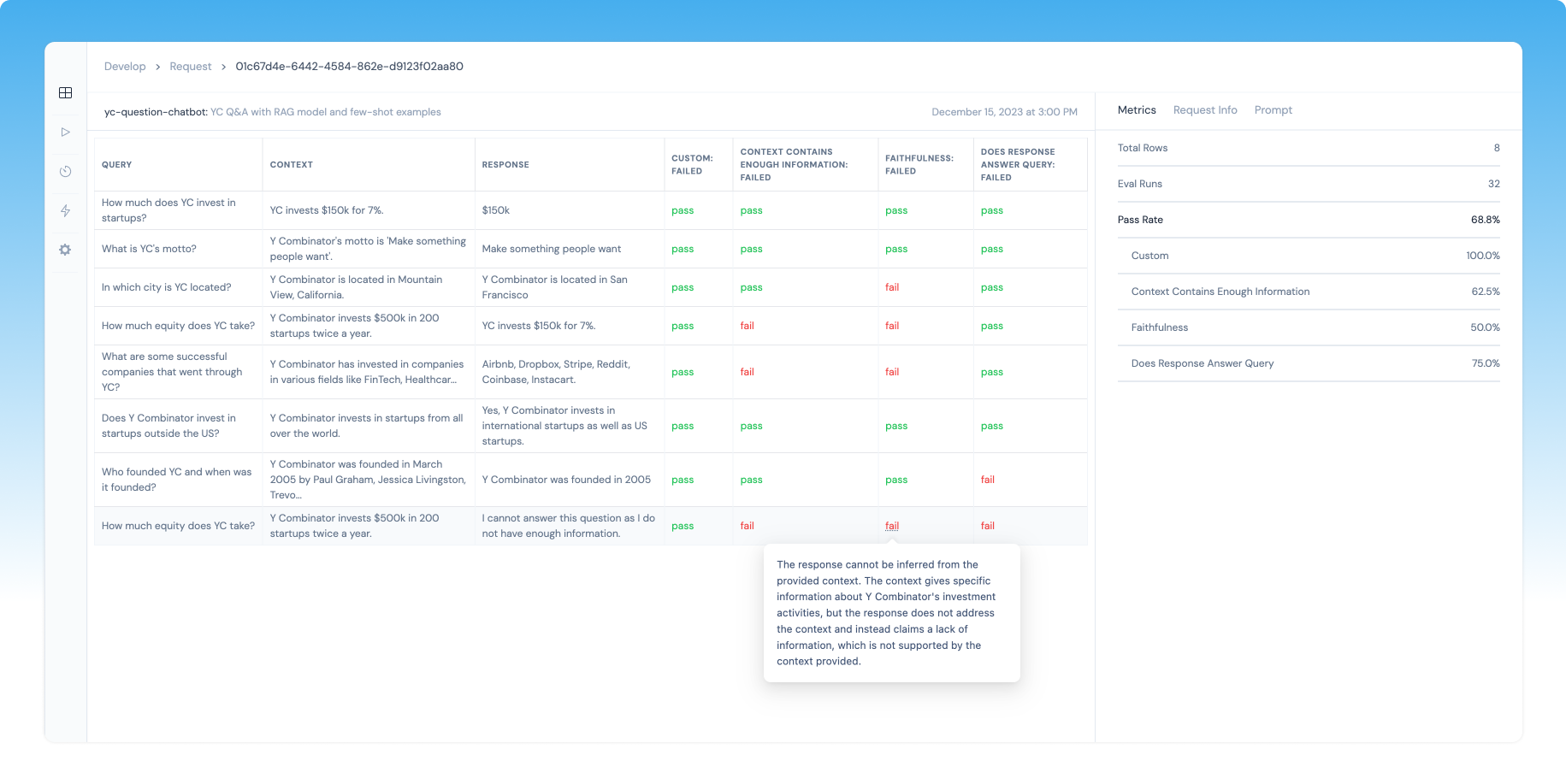

Evaluate: In Development

Watch Demo Video → | View on GitHub →

- Preset Evals: Use our preset evals to start measuring model performance in a few minutes.

- Define Custom Evals: Define custom evaluators in just a few lines of code and run them.

- Historical Performance Tracking: Athina keeps a record of eval runs and performance metrics along with your experimentation parameters, allowing you to run comparisons.
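As a rough illustration of what a custom evaluator and a recorded eval run might look like, consider the sketch below. It is written in plain Python and is not the Athina evals API: `EvalResult`, `contains_required_terms`, `run_eval`, and the dataset fields are hypothetical names invented for this example.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    passed: bool
    reason: str


def contains_required_terms(response, required_terms):
    """Hypothetical custom evaluator: pass only if every required term appears."""
    missing = [t for t in required_terms if t.lower() not in response.lower()]
    if missing:
        return EvalResult(False, "missing terms: " + ", ".join(missing))
    return EvalResult(True, "all required terms present")


def run_eval(dataset, params):
    """Run the evaluator over a dataset and keep the experiment parameters
    alongside the score, so that later runs can be compared."""
    results = [
        contains_required_terms(row["response"], row["required_terms"])
        for row in dataset
    ]
    pass_rate = sum(r.passed for r in results) / len(results)
    return {"params": params, "pass_rate": pass_rate, "results": results}


# Made-up dataset of model responses and the terms each one should mention.
dataset = [
    {"response": "Refunds are issued within 5 days.",
     "required_terms": ["refund", "5 days"]},
    {"response": "Please contact support.",
     "required_terms": ["refund"]},
]

# Each run is stored with its parameters; appending runs builds a history.
run_a = run_eval(dataset, {"prompt_version": "v1"})
history = [run_a]
```

Storing the parameters with each run is what makes comparisons possible: two entries in `history` that differ only in `prompt_version` can be compared directly on `pass_rate`.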

More Information

For in-depth documentation, please explore the following resources:

Our team is constantly improving the evaluation system and works closely with users to ensure the best experience. If you would like personalized assistance or want to discuss custom evaluations, please schedule a call with one of our founders.

Athina is more than just a monitoring platform; it's a comprehensive suite to supercharge your LLM development lifecycle. Leverage Athina's robust features to build AI applications that stand out due to their reliability and intelligence.